Martin Christie – Digital Imaging Lead - Colourfast

We always take a little break from the magazine over Christmas and New Year on the basis that not much is happening. However, while we’ve been resting plenty has been going on in the world outside and as regular readers will know I often like to stray away from the subject title of this column to talk about other things that affect us to some degree or another.

I seem to have been writing about Artificial Intelligence for some time and using it in some part for much longer, but more recently the newly elected leaders of our western part of the world have started talking about it like they’ve known about it all along.

Once upon a time you only had to put the word digital in front of something to make it sound modern and progressive, and anything without it would seem antique and out of date. The flaw was that not everything that wasn’t digital was redundant, nor was everything digital the perfect answer to all our problems. You have to know the pros and cons to make a choice.

You also have to know if the choice is being made for you. The insidious feature of AI, and its introduction into many parts of public exchange, is that we may not be aware that decisions are being made by a calculating machine rather than human judgment, or be able to question its authority.

Of course, since the advent of computers this pattern has been increasing, and there is no question that it has been a source of great progress in so many areas improving the quality of life in real terms. But the speed of this innovation will increase massively and in so many unpredictable ways with AI. We know from so many customers at the counter that lots of people struggle to cope with often quite basic tasks.

Indeed, when septuagenarian President Trump introduced his own multi-billion initiative his grasp of the subject was underlined when he suggested that everyone should write it down in their notepads so they don’t forget the name.

There is a certain logic to the computers we have been working with up to now, even if it’s not always obvious. The computers that are to come will be faster and smarter. But that doesn’t mean they will be plotting against us, they will in fact be trying to learn from us, and the decisions they come to will be based on our actions. We will in effect be our own crash test dummies.

We humans generally learn more from our mistakes than our successes. And while in theory AI should do the same, it remains to be seen whether this will be so in practice, especially in the longer term as small errors may become larger ones, compounded because they have previously been passed as correct.

So much for the global view. How will AI impact upon the print on demand world? If applied with some imagination it could be a massive aid in preparing digital images for actual print - being able to examine content in minute detail instantly and automatically correct not just size and shape, but colour and density in order to produce a perfect output saving time and resources as well as often frustration and wastage.

To achieve this, however, may require a much greater investment from print suppliers than they are prepared to expend, as we are not seen as a priority industry compared to other high profile ones, which are also more profitable. The frustration is that the technical possibilities already exist; what is missing is the effort required to apply them to print workflow.

We have had automatic exposure in digital cameras for years; it even exists to one degree or another in your ordinary copier or scanner, even if it has to be prompted to work properly. Essentially it judges the brightest and the darkest parts of an image and tries to balance out the two to produce an average. It’s not rocket science, but even phone cameras can now be programmed to recognise people, landscapes and the like and adjust to know they are shooting at night.
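For anyone curious what that balancing act looks like in practice, here is a minimal sketch in Python. It is one simple interpretation (an auto-levels style stretch on a list of grey values from 0 to 255), not the method any particular camera, copier or scanner actually uses, and the function name `auto_levels` is made up for illustration.

```python
def auto_levels(pixels, low=0, high=255):
    """Stretch grey values so the darkest maps to `low` and the brightest to `high`.

    Illustrative assumption: `pixels` is a flat, non-empty list of grey values.
    """
    darkest = min(pixels)
    brightest = max(pixels)
    if brightest == darkest:
        # A completely flat image has no contrast to stretch; return it unchanged.
        return list(pixels)
    span = brightest - darkest
    # Rescale every value in proportion to where it sits between the two extremes.
    return [round(low + (p - darkest) * (high - low) / span) for p in pixels]


# A dull, underexposed strip of greys gets spread across the full range.
print(auto_levels([50, 100, 250]))
```

Real devices weigh far more than two extremes, which is exactly where smarter scene recognition comes in.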

In comparison, printing software has hardly changed over the decades, but improving it requires more thought than just adding a few basic filters. It needs to overcome some of the regular error-strewn actions that cause so many problems. Automated pre-flight checks are all very well, but we have had them for years. It needs something more intelligent, and in keeping with the second quarter of the twenty-first century, but whether our industry can embrace it, or afford it, is another question. Driven by demand we tend to be obsessed by speed rather more than accuracy, but it surely must be possible to have both rather than accept that mistakes are just part of the process. It will be very much down to how we - and AI - learn.


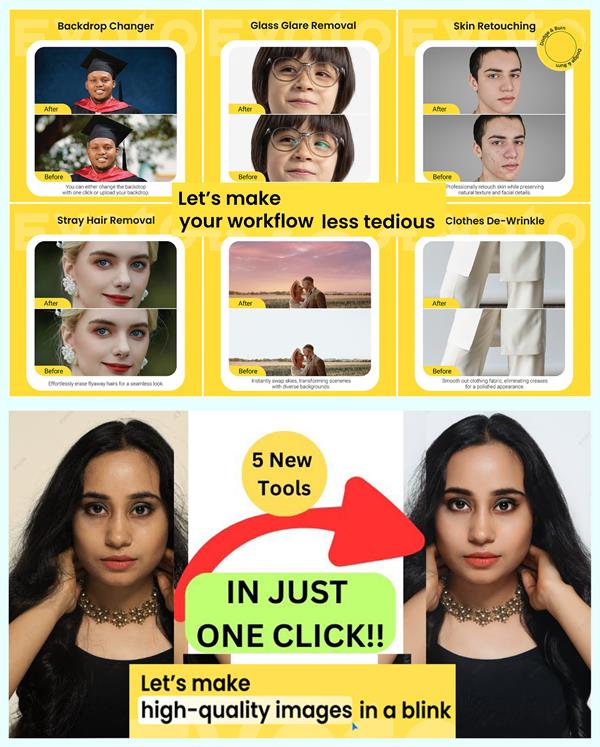

We have editing software that can identify individual persons from groups and features as small as teeth and eyes, as well as remove blemishes and other unwanted marks. But who or what decides what is an unsightly spot and not a cute freckle? I keep seeing promotions for magic software that will transform any portrait into a work of art at the press of a button, but to me they all look so much like the early days of Photoshop clones when for a short while some people did think that plastic skin was attractive. Surely processors can be taught to be smarter than that.

There are green shoots of promise amid the acres of popular gimmicks which are always present when the market is guided more by commercial interest than intelligence but we shall see whether they survive and prosper.

I’ll be an interested visitor at next month’s Photography and Video Show at London’s Excel Centre (8-11 March) to take stock of the current state of the image market. When I first visited the original version of the exhibition film was still dominant, and over the years I witnessed the transition to digital which mirrored my own progression with camera and kit. Having not been since before lockdown, it will be fascinating to see where the industry is now. Being primarily a consumer show, it reflects what customers are asking for, rather than a trade print event which is much more biased towards what the manufacturers want to sell you.

A few years ago photobooks were meant to be the next big thing, but apart from weddings they have failed to reach the targets the hype promised, as they have been dismissed by people who prefer to share things at a distance via social media rather than gather round a table top publication, however lavish and well presented.

Another one shot wonder was a phone company that famously claimed that it had re-invented photography and that the camera was dead and buried - including some graphic images of its graveside demise in its advertising.

Camera technology, however, is very much at the cutting edge, ironically partly because of the decline of sales of big bulky bodies and lenses, and the need to produce high quality output from increasingly small devices including drones. I expect a lot of them buzzing around the exhibition hall.

In fact, I think the photography market may well have found a bit of balance, where there is still a place for ‘proper’ cameras for more professional use rather than being a fashion accessory for the gadget hungry collector.

I do expect more than a few new companies promising to take my photography to a whole new level with their revolutionary software at supposedly reasonable prices, but will be equally surprised if they can show me something I don’t already know how to do.

I often find at exhibitions it’s the little unexpected things that you find much more useful so fingers crossed there will be some hidden treasure somewhere to make it a worthwhile journey.

I will be surprised if there is anything new in the scanning department - even if there are any scanners there at all they are likely to be just revamped older models.

Scanning is very much the poor relation of digital printing but still an important part of it, as we know, and we continue to resist the desire of manufacturers who would prefer to make a machine without a more expensive piece of glass on top.

Originally everything was done by casting an electric eye over an original item and producing a hard copy. Nowadays, though most things are done directly to print, there is still a need for capturing an exact image of an actual object in order to reproduce it.

There is a misconception that a scanner is just an enormous camera, represented by the size of the glass, but that is far from the truth. The size of the scanning area simply reflects the size of the copy that can be scanned. Quite literally it merely indicates the width of a mirror that moves across the platen area and projects the complete image into a comparatively small optical lens.

The scanner is basically a digital camera. The difference is that in the last twenty years camera technology has developed massively, while the basic flatbed copier has not.

The scanner works much like a camera in that it passes an electronic eye across an image and registers it dot by dot. How detailed this capture becomes is largely determined by the speed of the pass - but not entirely. The slower the mirror moves the more dots can be filled in, meaning a higher resolution. But this doesn’t necessarily equate to greater clarity, as detail, colour and the like are also dictated by the processor and its ability to turn that binary information into an accurate image file.
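To put some rough numbers on "more dots", the arithmetic can be sketched in a few lines of Python. The function names and the assumption of an uncompressed 8-bit RGB capture (3 bytes per pixel) are mine, purely for illustration; real scanners and file formats will differ.

```python
def scan_dimensions(width_in, height_in, dpi):
    """Return (width_px, height_px) for an original measured in inches."""
    return round(width_in * dpi), round(height_in * dpi)


def uncompressed_megabytes(width_px, height_px, bytes_per_pixel=3):
    """Approximate size of an uncompressed capture, assuming 8-bit RGB by default."""
    return width_px * height_px * bytes_per_pixel / 1_000_000


# A 10 x 8 inch original at two common scanning resolutions.
for dpi in (300, 600):
    w, h = scan_dimensions(10, 8, dpi)
    print(dpi, "dpi:", w, "x", h, "pixels, about", uncompressed_megabytes(w, h), "MB")
```

Doubling the resolution quadruples the number of dots the processor has to handle, which is one reason a higher setting does not automatically mean a clearer result.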

A modern camera, even one in a phone, can take an extremely clear picture and it does it at the blink of an eye.

For some years I have been using digital photography to capture large or delicate originals, including artworks, maps and three dimensional objects.

I have the advantage of a high resolution DSLR, professional studio lighting and accessories and a gaming spec pc to handle the editing of very large files. That and of course many decades of experience behind the lens understanding how light falls on surfaces, and how that related colour can be reproduced on paper. Not the resources of your average print shop, but it has made me realise how far the poor scanner has fallen behind.

In theory, AI introduced into a scanner should be able to better understand and reproduce the original, rather than having to do it post capture in software like Photoshop and Lightroom which are years ahead in terms of pixel information. Whatever file type you choose, a scan comes effectively pre-processed by the scanner so you have to manually adjust and correct any exposure deviations. Top end cameras can shoot a RAW format that comes virtually untouched so has a much greater window for colour and clarity for example.

Scanners also scan in a single pass, producing a directional shadow on anything with a relief or texture, and while editing software can work magic to stitch together several parts of a larger image with merging technology, unless you can scan those parts in the same plane any irregular cast will completely ruin it.

All in all Artificial Intelligence still has plenty to learn, so it will be an interesting adventure.

https://www.photographyshow.com